Generative Models

Gigapixel has generative AI models that produce outstanding results for unique enhancements on small images. If a ⚠️ Large image warning appears, you might need to crop or resample the image to smaller pixel dimensions to avoid processing errors.

System Requirements

Generative models have higher requirements for processing than the core models.

- Generative models other than Standard Max require 8 GB of VRAM with an NVIDIA or AMD GPU. Standard Max requires only 6 GB of VRAM with an NVIDIA or AMD GPU.

- 24 GB or more of system RAM is required for ARM-based systems.

- macOS 14 or newer is required for all generative models.

- 16 GB of RAM or more is required for all generative models.
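As a rough illustration, the requirements above can be written as a small checklist function. This is a minimal sketch, not part of Gigapixel: the `SystemSpec` fields and the `unmet_requirements` helper are hypothetical names, and the code assumes that the VRAM requirement applies only to non-ARM systems with an NVIDIA or AMD GPU, which the list above does not state explicitly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SystemSpec:
    """Hypothetical description of a machine; not a Gigapixel data structure."""
    ram_gb: float
    vram_gb: float = 0.0                # dedicated VRAM on an NVIDIA or AMD GPU
    is_arm: bool = False
    macos_major: Optional[int] = None   # None when the machine does not run macOS


def unmet_requirements(spec: SystemSpec, model: str) -> list[str]:
    """Return the listed requirements that `spec` does not meet for `model`."""
    problems = []
    if spec.ram_gb < 16:
        problems.append("needs 16 GB of RAM or more")
    if spec.is_arm:
        if spec.ram_gb < 24:
            problems.append("ARM-based systems need 24 GB or more of system RAM")
    else:
        # Assumption: the VRAM check is only meaningful on non-ARM systems.
        needed = 6 if model == "Standard Max" else 8
        if spec.vram_gb < needed:
            problems.append(f"{model} needs {needed} GB of VRAM")
    if spec.macos_major is not None and spec.macos_major < 14:
        problems.append("needs macOS 14 or newer")
    return problems


print(unmet_requirements(SystemSpec(ram_gb=32, vram_gb=6), "Standard Max"))
print(unmet_requirements(SystemSpec(ram_gb=32, vram_gb=6), "Recover"))
```

An empty list means none of the listed requirements were violated under these assumptions; it does not guarantee that a given image will process successfully.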

Wonder

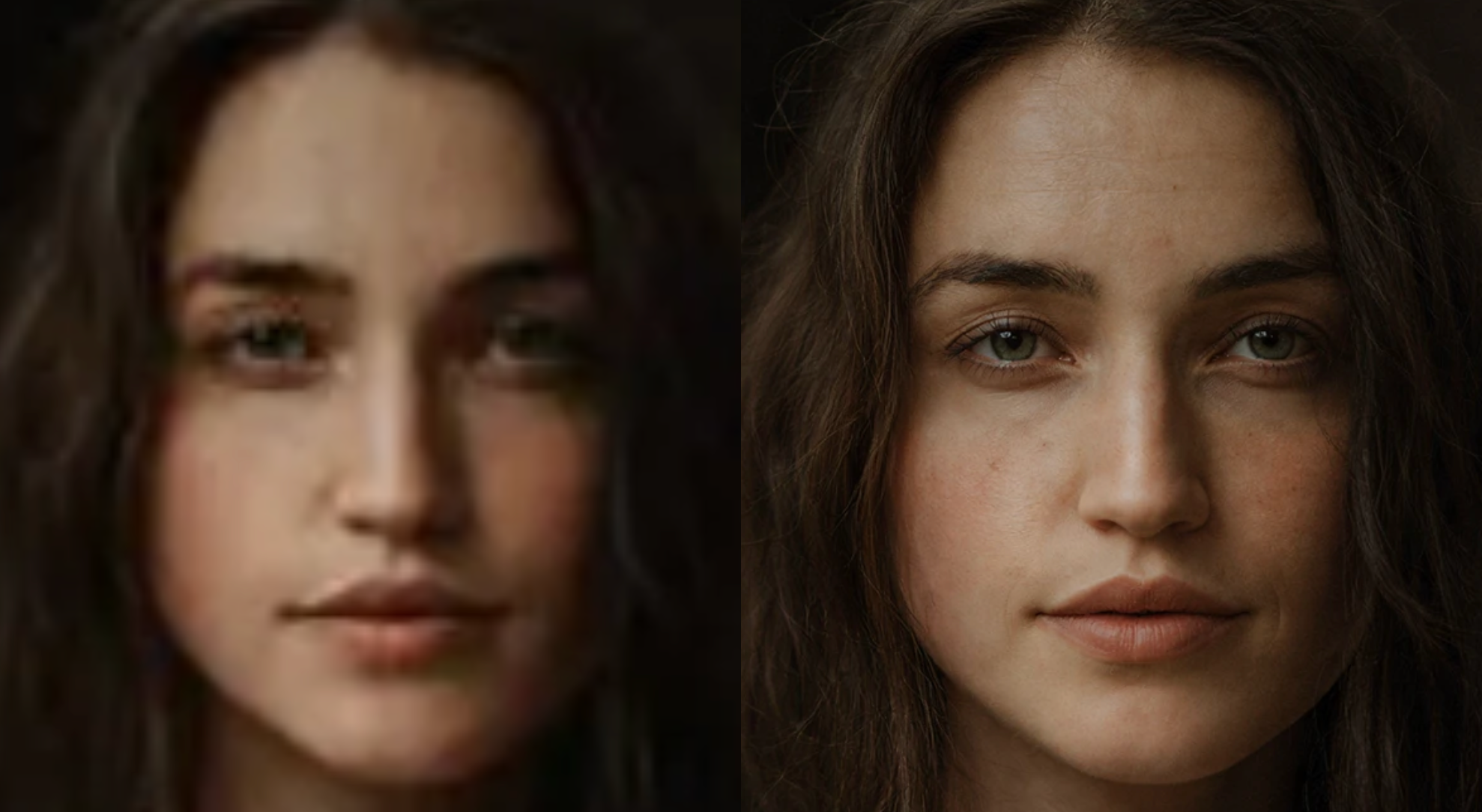

Wonder 1 is designed for low-resolution photos that include small faces, heavy noise, or blurred backgrounds. It can produce clean, natural results, with fewer artifacts and less oversharpening. It’s especially effective on old, pixelated, or social media images. While it can sometimes smooth out fine textures or struggle with dark areas, Wonder balances sharpness and realism to deliver a true upscale rather than an artificial interpretation.

Wonder 2 enhances your low-res images without producing over-sharpened, plastic outputs. Get high-accuracy results for intricate features in faces, textiles, and more. The one-step model upscales, denoises, sharpens, and improves lighting without stacking different enhancements.

Use Wonder 2 if your goal is to:

- Restore fine facial and texture detail

- Recover text, logos, and signage

- Reduce artificial-looking patterns

- Minimize generative artifacts

Do not use Wonder 2 if your image suffers from false resolution or noise and grain, or if it is a low-light image with artifacts.

Standard Max

Standard Max is built for speed and efficiency, delivering results on a wide range of images while using fewer system resources. Acting as a hybrid between Standard v2 and Recover v2, it provides a quick and simple workflow featuring a Strength slider for natural blending.

This large image was cropped for use with Standard Max.

Standard Max improves detail enhancement, generating fine, realistic textures that help bring your photos back to life.

Recover

Recover v3 is built for users who want soft, realistic enhancement rather than aggressive detail reconstruction. It shines especially with:

- Fur and feathers

- Natural textures that benefit from subtle recovery

Choose a strength mode (Low/Med/High). The app defaults to the Medium option for balanced enhancement. The strength mode controls how intensely detail is enhanced.

Optimized for speed, Recover v2 is faster than Recover v1. This model brings the best upscaling fidelity for old and low-quality photos.

Pre-downscaling

A pre-downscaling action improves results with larger images that suffer from false resolution. These images tend to have low information density: their pixel dimensions have increased without a meaningful addition of detail. Pre-downscaling addresses this by intelligently optimizing the image input before enhancement.

How it works:

- Pre-downscaling resamples the image to concentrate image density

- The result is then upscaled using AI to generate natural results

- Choose between three levels of downscale intensity

When to use Pre-downscaling:

- When images exceed 1000px on both sides

- Ideal for old JPEGs, scanned photos, or poorly upscaled content

- Select None if you prefer to skip pre-downscaling
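The size guideline above can be expressed as a simple check. This is a minimal sketch: `is_predownscale_candidate` is a hypothetical helper, not a Gigapixel function, and it assumes that "exceed 1000px on both sides" means the width and the height are each strictly greater than 1000 pixels.

```python
def is_predownscale_candidate(width_px: int, height_px: int,
                              threshold_px: int = 1000) -> bool:
    """True when both sides exceed the threshold (assumed reading of the guideline)."""
    return width_px > threshold_px and height_px > threshold_px


print(is_predownscale_candidate(1200, 1600))  # both sides exceed 1000 px
print(is_predownscale_candidate(1200, 800))   # only one side exceeds 1000 px
```

Pixel dimensions alone do not reveal false resolution, so a check like this only flags images that are large enough for pre-downscaling to be worth trying.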

Redefine BETA

Use Redefine to add definition and detail to low-quality or AI-generated images. You can prioritize upscaling for either realistic fidelity or creative distinction.

Redefine realistic

Choose between None or Subtle to set the level of adjustment that will be applied.

Selecting Subtle will enable the option for directing the adjustment using the Image description.

Redefine creative

Choose between Low, Medium, High, or Max to set the level of adjustment that will be applied.

Use the Image description to direct the changes that will be applied to the image.

Adjust the Texture slider to refine the results.

- Creativity - Low, Medium, High, Max

- Texture - adjust the level of detail that will be generated

- Image description - Use this if you want to be specific about the details you're looking for. The model responds to descriptive statements rather than directive ones. For example, use the phrase "girl with red hair and blue eyes" instead of "change the girl's hair to red and make her eyes blue".

Image Description

Check out the Community pages for tips on writing image descriptions from other users.

Image descriptions are unique to each individual image and cannot be batched.

Workflow

Because generative models are intended for small images, some users want larger results than their system or the cloud can process and export.

Here is the recommended multi-pass workflow for getting more resolution out of images used with generative models.

- Resize your source image to fit within generative model recommendations.

  - The ⚠️ Large image warning can indicate when resampling is needed.

  - Using pre-downscaling with Recover v2 can also work.

- Upscale with a generative model with a scale factor of 1-4x.

  - The scale factor is based on what your system is capable of rendering.

- Export the result of that generative model, creating a new file.

  - You can render locally if your system is capable or use Cloud render.

- Import that resulting file into Gigapixel, selecting a core model for additional upscaling with a larger scale factor.

  - We recommend Auto mode for model and setting selection.
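The arithmetic behind the multi-pass workflow can be sketched as follows. This is an illustrative calculation only: `plan_two_pass` is a hypothetical helper, and the pixel sizes are made-up example values. It takes the long side of the source image, the long side you want in the end, and the generative scale factor (1-4x, as described above), then reports the size of the intermediate file and the scale factor left for the core model pass.

```python
def plan_two_pass(source_px: int, target_px: int,
                  generative_scale: float = 2.0) -> tuple[int, float]:
    """Return (intermediate_px, core_scale) for a generative pass plus a core pass."""
    if not 1 <= generative_scale <= 4:
        raise ValueError("generative scale factor should be between 1x and 4x")
    # Size of the file exported after the generative pass.
    intermediate_px = round(source_px * generative_scale)
    # Remaining scale factor for the core model pass.
    core_scale = target_px / intermediate_px
    return intermediate_px, core_scale


# Example: 512 px source, 2x generative pass, 6144 px final size.
print(plan_two_pass(512, 6144, 2.0))  # (1024, 6.0)
```

In this example the generative model exports a 1024 px file, and the core model then needs a 6x scale factor to reach 6144 px.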